As screens producer for the Super Bowl LV Halftime Show, Drew Findley of DF Productions collaborated with the creative and producing teams to design, develop, and deliver the screen content imagery and animation for the performance featuring The Weeknd. Findley also interfaced with both the onsite programming and LED tech teams to make sure that all the design intentions translated properly in Raymond James Stadium in Tampa, FL, and on camera for millions watching at home. Live Design chats with Findley about the ins and outs of this pandemic-era project.

Live Design: What was the design philosophy / goals going into the project?

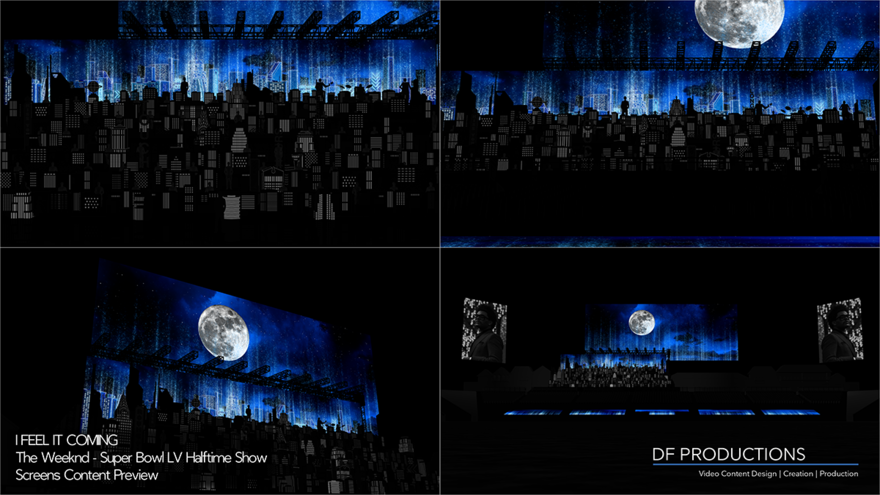

Drew Findley: The purpose of the main screen was to act as an extension of the set below it. The buildings in the screen were styled around the same foundational elements as the actual buildings below, but not so strictly that we couldn’t create realistic elements, animated window patterns, neon chases, or hyperreal skies. We used the 26 LED screens that surrounded the field to fill backgrounds in reverse shots and to create dynamics while the cast was on the field.

LD: What was the look you were going for?

DF: Each song was designed to have a different feel as we moved through the setlist. “Starboy” was intended to feel mysterious by not revealing the full city look. “The Hills” had a hazy, futuristic, dystopian feeling with smoke explosions, and “Save Your Tears” had a crisp and clean nighttime feel. The creative direction for the screen looks came through Es Devlin and La Mar Taylor on The Weeknd’s creative team and through collaborations with Al Gurdon, Bruce Rodgers, and the producers.

LD: How does the video integrate with the set (and lighting), both artistically and technically?

DF: Early on, we knew that the video city would have to be able to reproduce some of the effects and lighting tricks that the scenic city was capable of. We put a lot of effort into making sure all our content buildings had similar neon outlines that we could animate, and that we could chase and color change the windows and play with directional lighting. As we got closer and all the departments were in pre-viz, all the collaborators worked hard to make sure we were telling the same color story and that our content was a natural extension of the lighting and vice versa.

LD: What steps needed to be taken to prime the production for both a partial in-person audience and a large at-home audience?

DF: There are definitely more people who watch the show on TV than in the stadium, but a lot of work goes into making sure the in-stadium experience is a premium one. We design and produce content for screens that are seen on the broadcast for only a couple of seconds in a song, and sometimes not at all. For example, during “Can’t Feel My Face,” when The Weeknd was in the Infinity Light Room, the screens and lighting on the set were alive and animated only for the in-person audience.

LD: How did the camera angles impact design choices or logistics?

DF: It’s very important that the screen surfaces that appear in all the various shots help advance the feel of each number. This means that sometimes we need to bring the look in tighter and other times we need to expand it out across the stadium. Additionally, we had looks that required us to line up screens that were quite far apart. For example, during “I Feel It Coming,” we wanted the moon to rise from our main set screen onto the jumbotron in the stadium. To achieve this look, the moon had to split seamlessly across both screens, which have about a 40′ depth difference. We worked with Hamish Hamilton, the director, to figure out exactly which camera was going to be used at that moment, then Dan Efros recreated that camera position in 3D software and lined up the moon so it would look correct for that precise shot.
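The geometry behind that kind of forced-perspective lineup can be illustrated with a minimal sketch. This is not the team's actual workflow, which ran inside 3D software. It only assumes a simple pinhole camera and two flat screens facing it at different depths. The camera position, moon position, and screen distances are hypothetical values chosen for illustration; only the roughly 40-foot depth difference comes from the interview.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Point:
    """A position in feet: x is left/right, y is height, z is distance downfield."""
    x: float
    y: float
    z: float


def project_to_screen(camera: Point, target: Point, screen_z: float) -> Tuple[float, float]:
    """Return the (x, y) spot where the camera-to-target sight line
    crosses a flat screen lying in the plane z = screen_z."""
    dz = target.z - camera.z
    if dz == 0:
        raise ValueError("target must be at a different depth than the camera")
    t = (screen_z - camera.z) / dz  # fraction of the way from camera to target
    return (
        camera.x + t * (target.x - camera.x),
        camera.y + t * (target.y - camera.y),
    )


def drawn_radius(camera: Point, target: Point, target_radius: float, screen_z: float) -> float:
    """Radius to draw on a screen so the disc matches the virtual target's
    apparent size from the camera (similar triangles)."""
    return target_radius * (screen_z - camera.z) / (target.z - camera.z)


# Hypothetical setup: every number here is an assumption for illustration.
camera = Point(0.0, 10.0, 0.0)          # broadcast camera position
virtual_moon = Point(0.0, 110.0, 400.0)  # where the moon "lives" in 3D space
near_screen_z = 200.0                    # main set screen
far_screen_z = near_screen_z + 40.0      # jumbotron, about 40 ft deeper

near_xy = project_to_screen(camera, virtual_moon, near_screen_z)
far_xy = project_to_screen(camera, virtual_moon, far_screen_z)
near_r = drawn_radius(camera, virtual_moon, 20.0, near_screen_z)
far_r = drawn_radius(camera, virtual_moon, 20.0, far_screen_z)

print("near screen center/radius:", near_xy, near_r)
print("far screen center/radius:", far_xy, far_r)
```

In this sketch the same virtual moon has to be drawn higher and larger on the deeper screen for the two halves to meet from the chosen camera. It also shows why the alignment holds for only one camera position: move the camera and both projections shift by different amounts.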

LD: What software did you use for content creation?

DF: Content was created by my company DF Productions. We used Maxon Cinema 4D, Octane, Adobe After Effects and Unreal Engine to create all the content in the performance. All of the content was then encoded and played back in FlexRes Lossless on the Green Hippo Tierra+ servers.

Video Gear

- 2 MA Lighting grandMA3 consoles

- 4 Green Hippo Tierra+ Media Servers

- 6 Barco E2 frames

- 30x HD and 2x 4K feeds for all screens

- 300 tiles of Roe CB8 (blow-through for wind)

- 2 Brompton SX40 processors in Failover mode – secondary processor set up to take over if primary fails.

- All XD boxes in redundancy mode (one on input and one on output)

- 2 Folsom Image Pro 4K for switching our 4K feeds from Prime to Backup.

Read full article – Live Design Online.

Image Credit – DF Productions.

Article Credit – Meghan Perkins, Live Design Online.